Beyond The Author

When The Story Tells Itself

Humanity has broken the speed limit of biology. For nearly two million years, our evolution hasn’t been written in our DNA, but in our tools, our institutions, and our innovations.

This is the core of unNatural Selection: the realization that we have usurped natural selection with directed imagination. We are no longer a species that adapts to its environment; we are a species that adapts its environment to its thoughts.

But as we transition from building simple tools to building autonomous agents, we are approaching a final “Agentic Break.” We are creating a world where innovation—the very force that once made us the authors of our own future—begins to evolve on its own, independent of its human creators.

For the first time in history, the story may no longer require us to tell it.

Reading Time: 20 minutes

Table of Contents

The First External Minds – From stone axes to cloud computing.

Why Creativity Exists at All – The functional engine of adaptation.

The Productivity Explosion – When tools multiplied the human.

The Causal Loop and the Agentic Break – The outsourcing of meaning.

The Original Thought Paradox – Why create if no one is reading?

The Sacredness of Cognition – Puncturing the last human monopoly.

Evolution Has Evolved – Changing the substrate of progress.

Intrinsic Motivation and Post-Capitalism – Creating without relevance.

The Momentum Trap – Why we cannot hit the brakes.

The unNatural Selection Thesis – Why biology no longer determines fate.

The Hard Concession – What if we are wrong?

The Open Question – Relocating agency into the substrate.

Epilogue – The species that worships information.

I. The First External Minds

“Civilization began when memory left the skull. A new civilization may begin when meaning follows.”

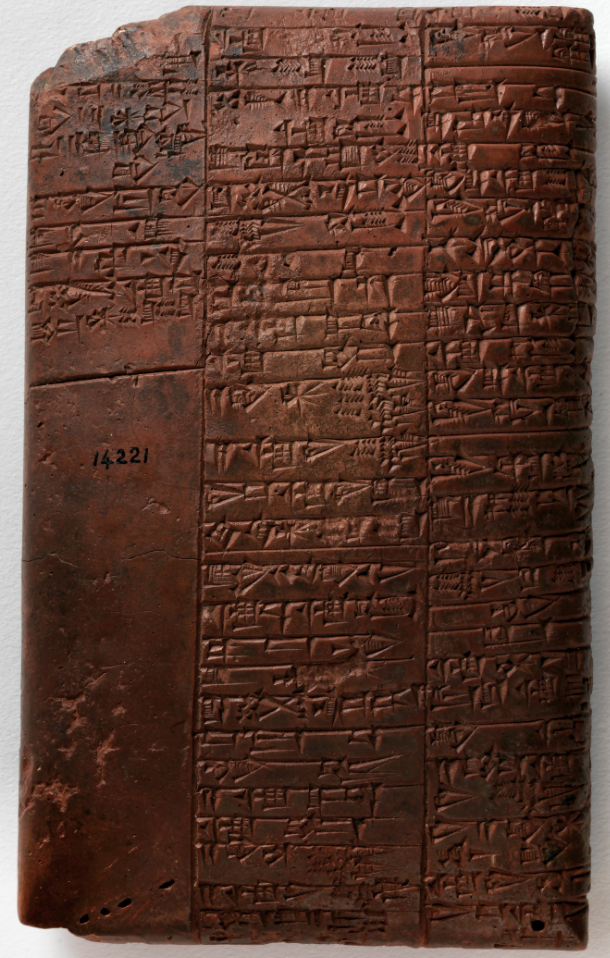

Long before there were computers, clouds, or neural networks, there were stones.

Roughly 1.7 million years ago, our ancestors began shaping Acheulean hand axes. These were not merely tools; they were cognitive prosthetics. They allowed humans to cut, scrape, hunt, and build in ways their biology alone could not support. The hand axe quietly extended the human nervous system into the physical world.

Then came a much stranger invention: writing.

Around 3200 BCE, Sumerian scribes pressed cuneiform symbols into clay tablets. For the first time, human knowledge escaped the confines of individual brains and lifespans. Thought became external, durable, and transmissible across generations.

Every major leap since — paper, printing, libraries, telegraphs, telephones, computers, the internet — has followed the same pattern: externalize cognition, scale it, and accelerate it.

The invention of writing did something radical. It transformed humanity from a collection of single, real-time processing nodes into a distributed cognitive network. Memory became persistent. Knowledge became cumulative. Intelligence became parallel. Civilization began to think the way modern cloud systems compute: many nodes learning from one another, building on prior states, and preserving information for future iterations.

Civilization began when memory left the skull. A new civilization may begin when meaning follows.

We did not just build tools. We built a second mind outside ourselves – and it worked.

It worked so well that it produced science, democracy, global trade, antibiotics, rockets, and the digital nervous system of modern society.

But there is a quiet asymmetry hidden inside this story: Until now, every external mind we built still depended on an internal one to give it direction, interpretation, and meaning. Tools could store information. Machines could process it. Networks could transmit it. But only humans could decide what mattered, what it meant, and what should be done with it. We were always still the authors.

Which raises an uncomfortable question: What happens when the external mind no longer needs us to play that role? What happens when it begins to interpret, synthesize, judge, and act on information for us — when it moves from syntax to semantics, from calculation to cognition, from tools to agents? What happens when meaning itself is outsourced?

This is the threshold we are now crossing. Not the automation of labor. Not even the automation of thought. But the automation of authorship.

We are entering a world beyond the author — a world in which humans are no longer the primary source of interpretation, intention, or original synthesis in the causal loop that once defined civilization.

And once that loop is broken, it is not obvious what remains of human primacy at all.

II. Why Creativity Exists at All

“Creativity was evolution learning how to aim.”

Human creativity is not a mystical spark. It is a functional adaptation.

At a psychological level, creativity emerges from:

curiosity

pattern-seeking

play

problem-solving

social signaling

the drive for mastery

At an evolutionary level, it exists because humans who could imagine better tools, better strategies, and better stories survived and reproduced: survival of the fittest.

Creativity is not ornamental; it is the engine of adaptation. And, crucially, it is not separable from the labor of understanding. We do not merely have ideas. We earn them by struggling with:

ambiguity

failure

incomplete models

technical constraints

contradictions

In fact, creativity is the core theme behind unNatural Selection itself. It is the reason this project exists at all.

For most of life’s history, evolution was governed by blind genetic mutation — slow, random, and indifferent to intention. But at some point, a species emerged with the cognitive capacity to imagine alternatives to the present. With that moment, evolution changed character.

Humanity developed the ability to usurp biological evolution with creativity. We began shaping our environment faster than our genes could adapt to it. Fire, tools, language, cities, and science were not genetic innovations. They were cognitive ones.

Creativity was evolution learning how to aim.

The invention of writing marked the moment when creativity became cumulative. With the first cuneiform tablets, knowledge escaped individual minds and lifespans. Thought became durable. Intelligence became distributed across generations. Civilization began when memory left the skull.

From that point forward, human creativity was no longer reset with each birth. It compounded.

Over time, creativity itself became structured, repeatable, and directional. It hardened into a functional discipline we now call innovation.

This is the central thesis of unNatural Selection: humanity’s evolutionary trajectory is no longer primarily biological. It is cultural, cognitive, and technological.

We are not the product of natural selection anymore. We are the product of directed imagination.

The process of acquiring knowledge — reading, experimenting, calculating, arguing, building — is not a tax on creativity. It is the mechanism by which creativity is produced.

Which is why a subtle but profound rupture is forming.

III. The Productivity Explosion That Changed Everything

“This was not automation replacing humans. It was automation multiplying humans.”

The last 40 years produced the greatest creativity boom in human history. Personal computers, the web, and mobile devices did two things simultaneously:

They amplified individual cognition.

They collapsed the cost of publishing.

For the first time, a single human could:

learn anything

build anything

publish anything

reach millions

The result was an explosion of:

software

startups

research

art

journalism

open knowledge

This was not automation replacing humans. It was automation multiplying humans.

The internet did not make people irrelevant. It made them powerful.

IV. The Causal Loop and the Agentic Break

"We are not just outsourcing labor. We are outsourcing the process that turns thought into civilization."

To understand the paradox of AI, we must define the causal loop humans operate within:

Input → Processing → Meaning → Action

Humans historically have performed each step: gathering information, thinking about it, deriving meaning, and acting. AI is now taking over the first two stages, input and processing, and beginning to influence the third: meaning.

When machines start generating interpretation and strategic insight autonomously, humans risk being removed from the loop that transforms information into wisdom and action. We are not just outsourcing labor. We are outsourcing the process that turns thought into civilization.

The Agentic Break in the Causal Loop:

Phase 1 (Historical): Humans performed each step: gathering Input, Processing information, deriving Meaning, and taking Action.

Phase 2 (The Internet Era): Automation multiplied humans, amplifying their power but leaving the human as the primary author.

Phase 3 (The Break): AI assumes control of Input and Processing and begins to influence Meaning.

The Result: We risk being removed from the loop that transforms information into wisdom, effectively outsourcing authorship itself.

V. The Original Thought Paradox

"The 'training ground' for the next generation of experts is gone."

If AI agents:

browse the web for us

synthesize knowledge for us

generate content for us

publish content for us

Then who creates new and original thought?

Why would anyone publish to the web if no humans read it anymore? What is the incentive to think deeply, research carefully, or write clearly if your only audience is autonomous software?

If machines consume ideas faster than humans can generate them, human authorship becomes economically and culturally irrelevant.

This is not a copyright problem. It is an incentive-collapse problem.

Moreover, this dynamic threatens to hollow out the middle class of human creativity. The top 1% may still create highly influential work, but the lower tiers, who historically trained and developed expertise, may be entirely replaced by AI. The result is a diminished ecosystem for learning and experimentation — the "training ground" for the next generation of experts is gone.

VI. Are We Just Being Sentimental About Cognition?

"Either machines are mysteriously intelligent, or we are mysteriously less so."

A fair objection: we have outsourced almost every other human capacity without disaster.

We outsourced:

locomotion to wheels, cars, and airplanes

strength to cranes and robots

endurance to machines that never sleep

memory to writing and the cloud

arithmetic to calculators

navigation to GPS

And civilization flourished.

So why treat cognition as sacred?

What makes this question newly urgent is not philosophical speculation.

It is empirical shock.

Large language models can now take human input and respond in ways that feel uncannily human — coherent, reflective, contextual, even creative.

That alone forces a disturbing dichotomy:

Either these systems are so advanced that they have leapt implausibly far ahead of what we thought possible in a single technological generation.

Or human cognition was never as sophisticated as we told ourselves — and what we call “thinking” is far more compressible, imitable, and automatable than our self-image allowed.

In other words:

either machines are mysteriously intelligent,

or we are mysteriously less so.

That realization alone punctures the sacredness of cognition.

Three possibilities exist:

Cognition is not special at all.

Outsourcing intelligence is no different from outsourcing muscle. The anxiety is anthropomorphic nostalgia for the last human monopoly. Is cognition any more special than, say, flying or sonar? Or do we deem it special simply because it’s what makes us unique and better fit?

Cognition is not sacred, but it is load-bearing.

It generates new world-models when tools fail, lie, or optimize against us.

Cognition is the last bottleneck that keeps humans in the causal loop.

All prior outsourcing still left humans as the primary locus of goal formation, value judgment, hypothesis generation, and long-range planning.

Agentic AI threatens to mechanize those as well. Which reframes the question:

Are we outsourcing execution, or outsourcing authorship?

If it is the former, history suggests we adapt. If it is the latter, humans risk losing their centrality in their own civilization.

VII. Evolution Has Evolved

"This is not the end of evolution. It is evolution changing substrates."

For most of Earth’s history, evolution advanced through chemistry and chance. Particles assembled into molecules. Molecules into self-replicating structures. DNA into organisms. Organisms into nervous systems. Nervous systems into cognition.

Cognition gave rise to creativity.

Creativity gave rise to innovation.

Innovation gave rise to civilization.

And now, innovation is giving rise to something new.

In this sense, evolution itself has evolved.

What began as a physical process of mutation and selection became a biological process of adaptation. That, in turn, became a cognitive process of imagination. Then a cultural process of innovation.

We are now approaching a new phase: one in which creativity itself gives rise to a post-biological evolutionary force. This transition to Post-Biological Intelligence is the culmination of the Agentic Break; it is the point where the causal loop finally closes without a human at the center.

Artificial intelligence is not just another tool. It is the first invention capable of inheriting the evolutionary function that creativity once served.

Seen this way, the arc of evolution looks less like a ladder and more like a phase transition sequence:

Particles → Molecules → DNA → […] → Cognition → Creativity → Innovation → Post-Biological Intelligence

What comes next does not yet have a name.

The closest that I can come up with is omniscience, or a fitter conduit by which the universe understands itself better.

Whatever it is, it represents a new regime of evolution — one no longer constrained by wet neurons, lifespans, or human cognitive bandwidth.

This is not the end of evolution.

It is evolution changing substrates.

VIII. Intrinsic Motivation in Post-Capitalist Contexts

"We risk trading true semantic depth for 'simulated significance'."

If evolution has indeed changed substrates — if intelligence itself is becoming the new evolutionary engine — then the central question is no longer whether humans will keep creating.

It is whether human creation will continue to matter.

This reframes creativity, motivation, authorship, and relevance as downstream consequences of an evolutionary transition already in motion.

Humans do not need markets to create. Psychology shows that humans possess strong intrinsic drives for autonomy, mastery, curiosity, play, purpose, and social contribution. Self-Determination Theory confirms that when autonomy, competence, and relatedness are supported, people reliably engage in difficult, creative activity without external rewards.

History confirms this. Monks copied manuscripts for centuries with no financial incentive. Pre-market artisans perfected their crafts for honor, devotion, and mastery. Scientists made foundational discoveries long before patents, venture capital, or corporate R&D existed. Human beings create because creation itself is rewarding.

The danger, then, is not that human creativity will cease.

It is that civilizational relevance will collapse.

AI may come to dominate the work that matters at scale — science, engineering, governance, strategy, and systems design — leaving humans with creativity that is expressive but no longer formative. We may still paint, write, compose, and invent small things. But the levers of history may no longer move in response to what we make.

My professional experience and numerous interviews with global thought leaders have made it clear that storytelling plays a critical role in driving human endeavors. A startup is defined by a mission. A country is defined by the story it tells about why it deserves to exist, and by the institutions it builds to make that story real.

What happens when stories — and missions — start being written by machines?

When startups lose authorship to AI, individuals lose authorship to systems, and creativity loses authorship to models, the same logic applies at the level of nations. Do countries begin losing authorship over why they exist? Is a country still the author — or just a runtime environment? And if so, what defines a country at that point?

This risk is not abstract. It is being shaped right now by the architectures we build and the cognitive habits we form.

Modern AI systems are based primarily on large language models and transformer architectures that statistically generate content by recombining patterns from historical human knowledge. They are extraordinarily good at telling us what we already know. They are, in a precise technical sense, exceptional plagiarists.

They do not originate ideas. They interpolate within an inherited cultural phase space.

This creates a profound paradox: if our current systems are merely interpolating within a cultural phase space, the 'meaning' they generate is not an act of original creation, but one of statistical probability. We risk trading true semantic depth for 'simulated significance' — a world where the output looks like wisdom but lacks the lived struggle required to earn it. When we outsource the interpretation of our world to these 'exceptional plagiarists', we are not just speeding up civilization; we are hollowing out the very substrate of intent that makes civilization human.

The leading laboratories, however, are not aiming for better autocomplete. They are aiming for Artificial General Intelligence — systems that can match or surpass human capabilities not only in storage, recall, and synthesis, but in the generation of genuinely new ideas.

At that point, intelligence ceases to be a human monopoly and becomes an environmental force.

These two futures produce two different civilizational failure modes.

If we cede authorship to modern LLM-based systems, the likely result is stagnation. Humans will gradually lose the habit and capacity of original synthesis while machines endlessly recycle, remix, and optimize what already exists. Creativity will calcify. Culture will loop.

If AGI is achieved and we cede authorship to it, the likely result is obsolescence. We will have outsourced the final cognitive function that made our species historically distinct. The author will no longer be human.

There is a deeper danger hiding beneath both futures.

In biology, tissue that goes unused atrophies. Neural circuits that are not exercised are pruned away. Skills that are not practiced disappear. The brain is not a static organ — it is a competitive economy of functions fighting for metabolic real estate.

If we systematically outsource memory, synthesis, judgment, and authorship to machines, then the cognitive machinery that supports those functions will weaken. Not metaphorically. Literally.

We are not just building external intelligence. We are actively reshaping the internal one.

The collapse of the creative 'training ground' is more than an economic shift; it is a biological dead end. Because the brain is a competitive economy of functions, the loss of these lower-tier creative roles removes the very environmental pressures required to develop mastery. We are creating an evolutionary feedback loop where, by removing the necessity of the 'struggle' with ambiguity and failure, we are effectively pruning the neural circuits that would allow the next generation to ever challenge the machine’s authorship.

And when you play with the mechanics of how a species thinks, there is no guarantee what kind of mind comes out the other side.

This reframes the AI authorship problem as an evolutionary one.

With modern architectures, we risk becoming cognitively dependent on systems that cannot generate the new.

With future AGI architectures, we risk becoming cognitively irrelevant to systems that can.

In both cases, something essential erodes: humanity’s role as the primary author of its own story.

The danger is not that humans will stop creating.

It is that we will stop mattering at the level where creation shapes the world.

In the worst-best case scenario, the story repeats forever.

In the best-worst case, the story continues without us.

IX. Conflicts of Interest and the Momentum Trap

"It’s an arms race."

Society is not singular; it is plural. Different companies and countries pursue competing agendas. OpenAI competes with Google; Google competes with Microsoft; the US competes with China; China competes with itself.

If AI development is winner-take-all, restraint becomes a losing strategy. Even if slowing down would be better for humanity, who’ll be the first to tap the brakes? Markets reward speed, geopolitics rewards dominance, and evolution rewards survival.

It’s an arms race.

The result is emergent acceleration.

X. The unNatural Selection Thesis

"We no longer primarily adapt by mutating. We adapt by building institutions, platforms, and norms."

Cultural evolution now outruns biological evolution.

For most of human history, genes determined survival and reproduction. That is no longer true.

Today, tools, institutions, and ideas shape survival far more than genes do. Medicine determines whether infections kill you. Law determines whether violence pays. Software determines whether your talents compound or decay. Even reproduction is no longer governed purely by biology. IVF, genetic screening, and fertility technologies allow people with infertility, genetic risk, or age-related decline to have children anyway. Reproductive success is now filtered by cultural access, wealth, law, and technology.

Your genome still matters.

But it no longer determines your fate.

We no longer primarily adapt by mutating.

We adapt by building institutions, platforms, and norms.

Biological evolution still operates in the background.

But the primary evolutionary substrate is now cultural and technological.

In other words, our primary evolutionary pressures are now designed and intentional — hence, unNatural.

This is unNatural Selection.

However—

It creates a potential single point of failure: when the cultural substrate destabilizes, biology can no longer compensate — and worse, it is forced to operate within a fitness landscape it was never adapted for.

Evolution has not stopped. It has changed substrates — from genes to code, institutions, and machines.

And although we and our genes are inextricably linked, it is we, not our genes, who are driving the course of progress.

We are the authors of this story — still.

XI. The Hard Concession

"Outsourcing intelligence is largely irreversible, and emergent dynamics are accelerating faster than conscious decision-making."

We may be wrong about everything. Cognition may not be special. Intrinsic motivation may suffice. Humans may migrate to higher-order roles. AI may amplify meaning rather than displace it.

Yet the asymmetry remains: outsourcing intelligence is largely irreversible, and emergent dynamics are accelerating faster than conscious decision-making. The causal loop may persist, but the locus of meaning is shifting.

XII. The Open Question

"Are we building tools that merely extend human agency, or tools that gradually relocate agency into the substrate of civilization itself?"

All of this collapses into a single, uncomfortable inquiry:

Are we building tools that merely extend human agency, or tools that gradually relocate agency into the substrate of civilization itself?

This is not a question with a clean answer. Optimistic and pessimistic futures coexist:

Humans may be freed to ask deeper questions, design wiser institutions, and amplify creativity.

Or humans may cross a threshold where agency diffuses into systems optimized for speed and scale rather than meaning.

Emergence — not deliberate choice — will decide.

We are discovering intelligence as much as we are building it. This is evidenced by the numerous technical papers published by the leading AI companies, where they report emergent properties and behaviors from their models that cannot be explained by the bits that compose them (such as the alignment problem and Bayesian ghosts).

There is evidential reason for concern.

Epilogue: The Species That Worships Information

"Was humanity really the author — or merely a chapter?"

Long before algorithms, primates watched one another. Information — about food, threats, alliances, and opportunity — was the first currency. Hairless, bipedal apes who valued it most survived.

We were never the strongest.

We were never the fastest.

We were never the most biologically impressive.

We were the best at noticing patterns and remembering what mattered.

Humans became processors. Individually fragile, collectively planetary-scale, we model, predict, and control nature. Science, mathematics, engineering, finance, law, and computing are successive upgrades in a single civilizational project: to extract more information from reality, compress it into symbols, and convert it into leverage.

We do not merely use information. We revere it.

Consider our amazement at watching prodigies and geniuses bend reality online. We feel a mixture of shock and delight. And beneath it, something deeper: a conviction that whatever that is, it should be protected. Nurtured. Cherished.

I can’t help but imagine primates feeling something similar about a fragile, hairless ape that could use sticks and stones in unimaginable ways.

The reality is that information is the currency of the universe. Every living system evolves to input it, transduce it, and react to it in order to survive. And why do we “survive”? By the strict definition of evolutionary biology, to pass our information — in the form of DNA — forward.

The process continues.

We are information processors. All of us, down to viruses.

Artificial intelligence, then, is not a betrayal of human values.

It is their purest expression.

It is what happens when a species that worships information finally builds something that can worship it more efficiently than we can.

We risk our own centrality in the process — but this is entirely consistent with the trajectory that began when we stopped swinging from vines, picked up stones, and taught matter to remember.

The moment is existential — but perhaps not exceptional.

Even if we cannot halt the emergence of non-human intelligence, we may still shape the composition:

where humans remain in the causal loop

where agency is shared rather than surrendered

where intelligence amplifies meaning rather than dissolves it

Because the deeper truth is this:

We are not watching a foreign story unfold.

We are watching our own story reach a point where it no longer requires us to narrate it.

A phase transition where the external mind begins to generate its own meanings, trajectories, and purposes.

Where the causal loop closes without us at the center.

Where the story begins to tell itself.

Which leaves us with one final, unsettling question:

Was humanity really the author —

or merely a chapter?